AI Video Rising

Recent releases of impressive generative video models promise to disrupt video creation

It’s been over a year since ChatGPT was released to the public, triggering an avalanche of AI innovation and potential.

It’s been even longer since the first iteration of the text-to-image favorite MidJourney entered the market. Since then, quality and accuracy have improved drastically, going from novel, artifact-riddled, robotic images to legitimately awe-inspiring creations.

These rapid advances in text and image generation have already changed how people create and do business.

AI Video

With the recent release of several new models and tools, including Stable Video from Stability AI, Pika 1.0, and RunwayML, AI video is now poised to be the next area of innovation.

Currently, there are a few different methods for creating AI video, each with different strengths and applications.

Here is an outline of the different methods with some example experiments:

Text To Video

Text to video is the easiest way to generate AI video and the technique that most people are probably familiar with due to its prevalence in the AI image space.

It’s as simple as describing a scene in text with as much detail as possible, and then sending that description to the model through a user interface for generation.

As with all AI prompting, there are techniques and structures for describing what you want to the system, which can be a bit of an art form in itself.

Generally, the more descriptive the better.
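To make the "more descriptive is better" idea concrete, here is a minimal Python sketch of one way to assemble a prompt from separate parts (subject, setting, lighting, camera, and style keywords). The `VideoPrompt` class and its field names are my own illustration, not part of RunwayML, Pika, or any other tool. The models simply take the final text.

```python
from dataclasses import dataclass, field


@dataclass
class VideoPrompt:
    """Hypothetical container for the parts of a descriptive prompt."""
    subject: str
    setting: str = ""
    lighting: str = ""
    camera: str = ""
    style_tags: list[str] = field(default_factory=list)

    def render(self) -> str:
        # Sentence-like parts are joined with ". ", skipping any left empty.
        sentences = [
            part.strip().rstrip(".")
            for part in (self.subject, self.setting, self.lighting, self.camera)
            if part.strip()
        ]
        text = ". ".join(sentences)
        if text:
            text += "."
        # Style keywords go at the end as a comma-separated list.
        tags = ", ".join(t.strip() for t in self.style_tags if t.strip())
        return f"{text} {tags}".strip()


prompt = VideoPrompt(
    subject="a young metal guitarist playing a guitar solo on stage",
    setting="a large festival with a crowd in front of the stage",
    lighting="stage lights beaming down",
    camera="mid distance shot",
    style_tags=["film grain", "high quality"],
)
print(prompt.render())
```

Splitting the prompt up like this makes it easy to swap one piece (say, the camera angle) while keeping the rest of the scene fixed between experiments.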

Usage and Application

In its current state, text-to-video generation works really well for scene ideation and brainstorming as well as b-roll and background videos that don’t require too much detail.

As you can see, the images aren’t always accurate, but so far I am just experimenting and haven’t spent the time to fully refine some of these prompts.

My Examples

Here is an example that I made of a Dinosaur Fighter using the following prompt:

prompt (runwayML): a muscular shirtless human man standing still face to face with a carnivorous dinosaur. They are standing away from each other about 50 yards apart on a open field of dirt and rock. Mid day sun brightly shining behind them as dust floats up from the ground. Side camera view. 8k uhd, dslr, high quality, film grain, Fujifilm XT3

It’s not quite a T-Rex, but it’s an intimidating dinosaur-like creature and captures the drama of the scene.

And here’s another one of a prog metal guitarist shredding arpeggios in 27/8 time on stage made with Pika. This model, and many others up to this point, still can’t get hands and fingers right 🤙😆.

prompt (pika): a mid distance shot of a young metal guitarist playing a guitar solo on stage at a large festival with a crowd in front of the stage and stage lights beaming down

Try It Yourself

Below is a hosted model on replicate.com where you can see the usage of the text-to-video technique using the AnimateDiff extension for Stable Diffusion. This extension for the popular image generation model even has different camera movement parameters that you can use to further control the scene.

Replace the current prompt text and click “Run” at the bottom to try it yourself.

https://replicate.com/zsxkib/animate-diff?prediction=q6l4fjlbjb4teeuupiinnfmncu

Image To Video

Image-to-video generation is just that, converting an image to a video. You input an image to the model and then it reads the contents of the image from style, to form, to characters, and uses that data to inform and calculate movement.

Video is only a sequence of images at its core, so the model takes the context of the initial image and generates a sequence of next images that might make sense based on the input.
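Here is a toy Python sketch of that "video is a sequence of images" idea. Everything in it is an assumption for illustration: a frame is a tiny grid of grayscale numbers, and `toy_next_frame` just shifts pixels sideways as a stand-in for a model. Real image-to-video models are far more sophisticated and don’t necessarily produce frames one at a time like this.

```python
Frame = list[list[int]]  # a tiny grayscale image: rows of pixel values


def toy_next_frame(frame: Frame) -> Frame:
    """Stand-in for a model: shift every row one pixel to the right (wrapping)."""
    return [row[-1:] + row[:-1] for row in frame]


def image_to_video(first_frame: Frame, num_frames: int) -> list[Frame]:
    """Build a clip by repeatedly deriving the next frame from the last one."""
    frames = [first_frame]
    while len(frames) < num_frames:
        frames.append(toy_next_frame(frames[-1]))
    return frames


def duration_seconds(frames: list[Frame], fps: int) -> float:
    """Clip length is just the number of frames divided by frames per second."""
    return len(frames) / fps


image = [
    [9, 0, 0, 0],
    [0, 9, 0, 0],
]
clip = image_to_video(image, num_frames=4)
for index, frame in enumerate(clip):
    print(index, frame)
print(duration_seconds(clip, fps=8), "seconds")
```

The input image is always frame zero, and every later frame depends on what came before it, which gives some intuition for why small errors can pile up over longer clips.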

Usage and Application

This type of video creation is pretty novel at the moment and tends to lose accuracy the longer the video generation is, but it is definitely impressive to see static images come to life!

Image-to-video generation is great for social media content and ideation like live mood boards or storyboard videos to convey feel and aesthetic for final production in music videos or film.

A great workflow for ideation and storyboarding is prompting images in MidJourney or DALL·E 3, then bringing them to life with an image-to-video model like Gen2 from RunwayML, Pika, or Stable Video.

My Examples

Here’s an example from an image of myself I created for a previous article (linked below) using a trained LoRA and Stable Diffusion. I took the generated image and ran it through Stable Video to bring it to life (double AI generation)!

And here’s another example of an image that I created in MidJourney for another post brought to life with RunwayML Gen2.

Lastly, here’s a standoff between an AI Muscle Michael and a T-Rex. This is completely generated from the image with no extra prompt using Pika. It is particularly impressive that the model derived the context of the image enough to make me turn my head and look at the T-Rex!!

Try It Yourself

Below is a link to an image-to-video model that uses the new Stable Video Diffusion. Replace the input image and click run to see your image come to life. (The default parameters work well, but feel free to tweak them for different results.)

Video To Video

Video-to-video generation is where you input an existing video and alter its appearance with AI by changing its style, or various aspects of the original video.

This technique is more advanced as you will need quality source video in order to produce the best results. In that lies flexibility and power though, so I view this method as having a ton of potential and contributing to the most practical impact in the near future.

Usage and Application

With this approach, you can still direct a basic scene, using either a traditional approach with a physical camera or a virtual approach with a 3D engine (such as Unity, Unreal Engine or Blender).

In creating the source video, you can have complete control over characters and camera without having to worry as much about the style of the shot, such as background, wardrobe, and even lighting. Then, with AI generation, you can later transform character, setting, and style into anything by processing the basic shot.
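As a rough mental model of this workflow, here is a toy Python sketch where the source video supplies the motion and a separate "style" step repaints each frame. The names and the warm-tint `toy_style` function are placeholders I made up for illustration. Real video-to-video tools repaint frames with a generative model and also work to keep the look consistent from frame to frame, which this sketch does not attempt.

```python
Pixel = tuple[int, int, int]  # (red, green, blue), each 0-255
Frame = list[list[Pixel]]     # rows of pixels


def toy_style(pixel: Pixel) -> Pixel:
    """Placeholder 'style': a warm tint. A real model would repaint the frame."""
    r, g, b = pixel
    return (min(255, r + 40), g, max(0, b - 40))


def restyle_video(frames: list[Frame]) -> list[Frame]:
    """Apply the style to every frame, keeping frame count and layout intact."""
    return [[[toy_style(p) for p in row] for row in frame] for frame in frames]


# Two 1x2 frames: a white "character" pixel moves from left to right.
source = [
    [[(255, 255, 255), (0, 0, 0)]],
    [[(0, 0, 0), (255, 255, 255)]],
]
styled = restyle_video(source)
for before, after in zip(source, styled):
    print(before, "->", after)
```

The character still moves from left to right in the styled clip, so the direction of the shot comes from the source video and only the look changes.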

The best way to demonstrate what I mean is in the examples below.

I’m still learning techniques, so my examples aren’t too impressive, but a little farther down, you can see some impressive professional examples to better understand the potential of this technique.

My Examples

In this first example, I downloaded a pre-made, 3D animated character rig from Mixamo and imported it into the Unity game engine, where I added a simple camera animation.

From there, I exported the basic video and ran it through Gen1 in RunwayML with an input image reference directing the style. (A text prompt for style is also an option instead of a reference image.)

I believe this kind of approach will be immensely powerful for creating highly stylized renders for music videos and films as it continues to improve. I’m excited to continue to play around with and improve this technique for more videos in the future.

Input Image:

Here’s another older video that I did earlier this year using stock footage as the original video, and then transforming the style a bit with Warpfusion. Not quite as impressive, but you can see how it might be useful to alter the style of existing videos.

Try It Yourself?

As mentioned above, this technique is a bit more complicated to dive into, but you can grab video from a stock footage site and try running it through RunwayML Gen1 to transform it.

Better Examples from Pro Creators

My examples are a bit crude for now as I was mostly experimenting and didn’t spend a ton of time tweaking prompts and parameters. AI moves exponentially though, so quality and ease of use will catch up quickly based on current capabilities, and as a result, use cases will become more relevant and realistic.

Here are some really impressive examples of AI video made by professional creators using existing models.

Text-to-Video: Sci-Fi trailer

This entire video is made from text-to-video prompted clips in Pika, and then edited together with music.

Image-To-Video: Various Examples

Here is a video containing various impressive image-to-video results from RunwayML Gen2.

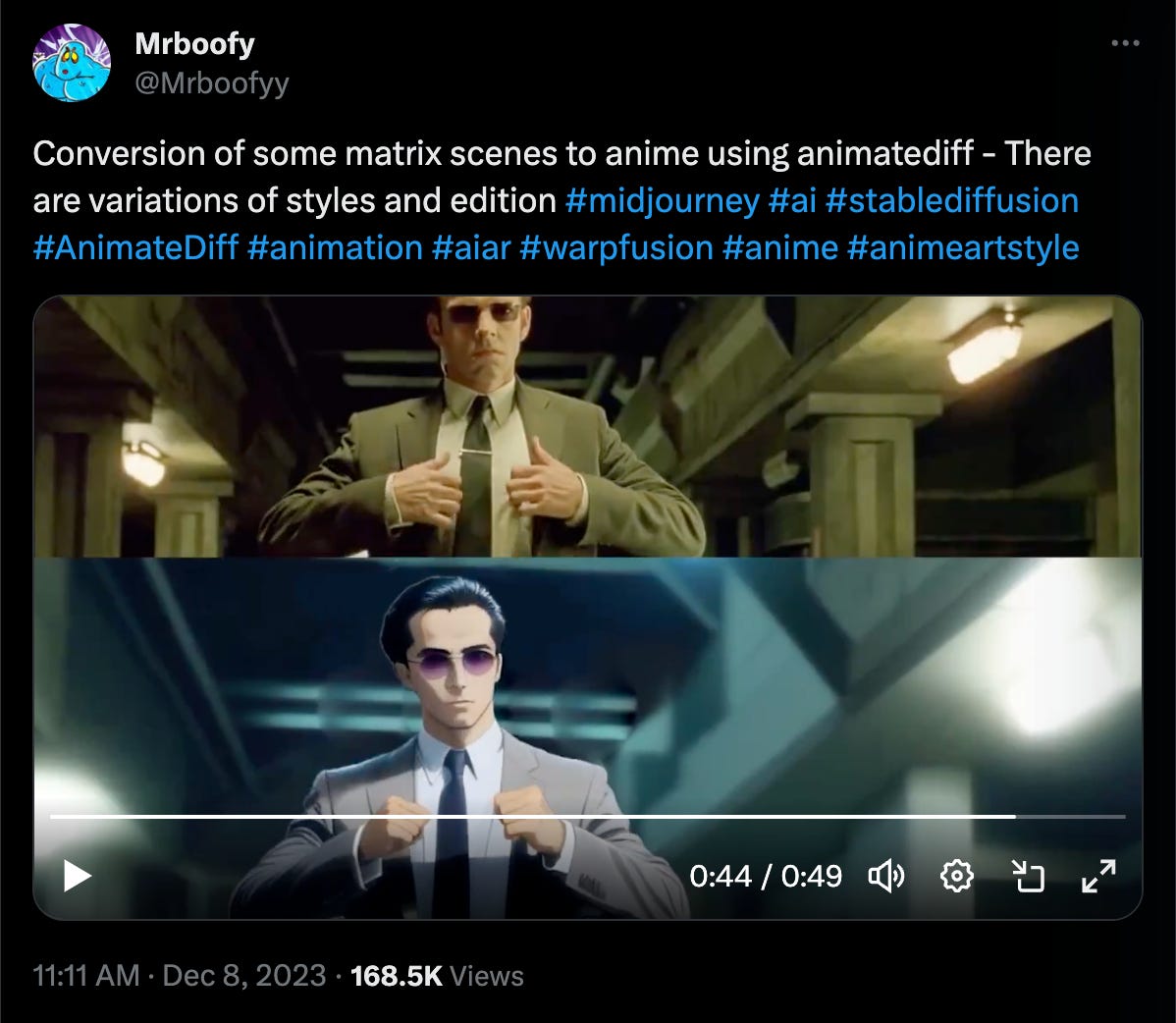

Video-to-video: AniMatrix

This user, MrBoofy, converted an actual scene from The Matrix movie into an anime version using AnimateDiff on Stable Diffusion.

AI Video Predictions

The first versions of AI generated video have been pretty rough, with a jerky, stutter-like style that most of the internet can recognize by now. Because these tools are so accessible, it feels like generative AI fatigue has started to set in a bit as more and more content of that style is shared and its novelty wears off.

It is my belief that as these tools continue to improve, and new techniques are discovered, we will see better and better quality content created by talented creators and storytellers.

The novelty aspect of “look I entered text and created this!” will fade, and the essence of talent in storytelling and creativity will re-emerge.

In the future, I can see creators of all types more easily creating video to accompany their artistic story and expression. Think anything from TikTok music videos created by musical artists, to Etsy creators featuring physical creations in AI generated story videos, to short films accompanying a podcast or blog story.

There will be a big wave of independent film creators consisting of small teams that will begin to rival bigger studios, who have become more focused on profit and commercialism than on expanding storytelling and art.

There are boundless applications in the commercial space as well. From commercials to product demos, the broader applications of generative AI video are set to revolutionize industries.

Ultimately generative AI is a tool that enhances process and provides new avenues to explore the core of human creativity and storytelling. It will provide independent creators with the ability to explore new limits of innovation without the restrictions of large budgets and studio backing.

And as a result, they will provide audiences with exciting stories and worlds that they have yet to see.

The Strength of Human Creativity

One last mention to touch on a recurring theme within the topic of AI generated content and art: I do strongly believe that the essence of art and expression is very human in nature, and when it comes to artistic connection, nothing can replace the human heart and mind.

More functional and less creative applications and industries will likely be more affected by the emergence of AI, but true creativity is, at its core, a very human trait.

As I mentioned earlier, AI in general is a tool that will serve to augment the vision and ambition of those who have an idea and the ability to harness this evolving technical power.

As time goes on, novelty will fade and true art will shine through, as it always has.

~ Michael

Exciting Tech of The Week

The AI Video Creation Tools from This Post

I used the amazing tools below for the experiments in this post. They are all in various states of release, with some like RunwayML having an easy-to-use UI, and others like Stable Video, Stable Diffusion and Warpfusion requiring technical setup to use.

All of these projects are actively iterating on new versions that will become easier and easier to use while producing better and better results.

2024 is looking like a big year for AI video, led by these exciting projects.

RunwayML - https://runwayml.com/

Pika - https://pika.art/

Stable Video - https://stability.ai/stable-video

Warpfusion - https://github.com/Sxela/WarpFusion

AnimateDiff / Stable Diffusion - https://animatediff.github.io/

My Creative Updates

With the holidays and work on an exciting new AI project that I’ll be announcing soon, my music progress has been a little slow of late. I’m planning on picking up work on the EP again in the new year though, and will share updates as they come.